Most Unix commands will show a short list of the most-helpful flags if you use --help or -h.

sed can do a bunch of things, but I overwhelmingly use it for a single operation in a pipeline: the s// operation. I think that that’s worth knowing.

sed 's/foo/bar/'

will replace the first match of the regex “foo” in each line with “bar”.

That’ll already handle a lot of cases, but a few other helpful sub-uses:

sed 's/foo/bar/g'

will replace all text matching the regex “foo” with “bar”, even if there is more than one match per line.
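To see the difference between the two side by side, here’s a small sketch. The names first_only and all_matches are hypothetical wrappers around the two commands above, nothing more.

```shell
#!/bin/sh
# Hypothetical wrappers around the two sed commands above.
first_only() {
  sed 's/foo/bar/'    # replace only the first match on each line
}
all_matches() {
  sed 's/foo/bar/g'   # replace every match on each line
}

printf 'foo foo\nfoofoo\n' | first_only    # prints "bar foo", then "barfoo"
printf 'foo foo\nfoofoo\n' | all_matches   # prints "bar bar", then "barbar"
```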

sed 's/\([0-9a-f][0-9a-f]*\)/0x\1/g'

will capture the text matched inside the backslash-escaped parentheses and put it back into the replacement text wherever ‘\1’ appears. In the above example, that finds every run of hexadecimal digits and prefixes it with ‘0x’.
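A sketch of backreferences in practice, using hypothetical helper names. The hex pattern requires at least one digit ([0-9a-f][0-9a-f]*); a bare [0-9a-f]* can also match the empty string, and with /g that sticks “0x” in at positions where there’s no hex digit at all. The second helper shows that numbered groups can be reordered: \2 and \1 swap the two sides of a key=value pair.

```shell
#!/bin/sh
# Prefix each run of one or more lowercase hex digits with 0x (POSIX BRE).
hexify() {
  sed 's/\([0-9a-f][0-9a-f]*\)/0x\1/g'
}

# Hypothetical example: swap "left=right" into "right=left" using two groups.
swap_pair() {
  sed 's/\([^=]*\)=\(.*\)/\2=\1/'
}

echo 'ff 1a2b ZZ' | hexify      # prints "0xff 0x1a2b ZZ"
echo 'key=value' | swap_pair    # prints "value=key"
```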

If you want to match a literal “/”, the easiest way to do it is to just use a different separator; if you use something other than a “/” as separator after the “s”, sed will expect that later in the expression too, like this:

sed 's%/%SLASH%g'

will replace all instances of a “/” in the text with “SLASH”.
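For comparison, here’s the alternate delimiter next to the escaped-slash version it replaces, plus a common case where this comes up: rewriting a path prefix. The function names are hypothetical.

```shell
#!/bin/sh
# With % as the delimiter, a literal / needs no escaping.
slash_to_word() {
  sed 's%/%SLASH%g'
}
# Same result with the default delimiter, escaping the slash instead.
slash_to_word_escaped() {
  sed 's/\//SLASH/g'
}

echo 'a/b/c' | slash_to_word                        # prints "aSLASHbSLASHc"
echo '/usr/local/bin' | sed 's%/usr/local%/opt%'    # prints "/opt/bin"
```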

I would generally argue that rsync is not a backup solution.

Yeah, if you want to use rsync specifically for backups, you’re probably better off using something like rdiff-backup, which uses the rsync algorithm (via librsync) to generate backups and store them efficiently, and driving it from something like backupninja, which will run the task periodically and notify you if it fails.

rsync: one-way synchronization

unison: bidirectional synchronization

git: synchronization of text files with good interactive merging.

rdiff-backup: rsync-based backups. I used to use this and moved to restic, as the backupninja target for rdiff-backup has kind of fallen into disrepair.

That doesn’t mean “don’t use rsync”. I mean, rsync’s a fine tool. It’s just…not really a backup program on its own.

OOMs happen because your system is out of memory.

You asked how to know which process is responsible. There is no correct answer to which process is “wrong” in using more memory — all one can say is that processes are in aggregate asking for too much memory. The kernel tries to “blame” a process and will kill it, as you’ve seen, to let your system continue to function, but ultimately, you may know better than it which is acting in a way you don’t want.

The kernel should log an entry to the kernel log when it OOM-kills something.
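A small filter for pulling the OOM killer’s entries out of the kernel log, as a sketch. The helper name oom_kills is hypothetical, and the match strings are an assumption: the exact wording of the kernel’s OOM messages has changed between versions, so widen the patterns if nothing turns up.

```shell
#!/bin/sh
# Hypothetical helper: keep only kernel-log lines that look OOM-related.
# Assumption: messages contain phrases like "Out of memory", "Killed process",
# or "invoked oom-killer"; wording varies by kernel version.
oom_kills() {
  grep -i -e 'out of memory' -e 'killed process' -e 'oom-kill'
}

# Feed it kernel log text, e.g. (may require root, depending on the system):
#   dmesg -T | oom_kills
#   journalctl -k | oom_kills
```

Both dmesg -T and journalctl -k print wall-clock timestamps, which helps when lining an OOM entry up against other logs.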

It may be that you simply don’t have enough memory to do what you want to do. You could take a glance in top (sort by memory usage with shift-M). You might be able to get by by adding more paging (swap) space. You can do this with a paging file if it’s problematic to create a paging partition.
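Roughly what adding a swap file looks like on Linux, as a sketch under stated assumptions: the helper name make_swapfile, the path, and the size are placeholders; real use needs root; and setting DRY_RUN=1 only prints the commands. Some filesystems have extra requirements for swap files, so check your filesystem’s documentation before doing this for real.

```shell
#!/bin/sh
# Hypothetical helper: create and enable a swap file.
# Usage: make_swapfile PATH SIZE_IN_MB   (set DRY_RUN=1 to print, not run)
make_swapfile() {
  path=$1
  size_mb=$2
  run=""
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  $run dd if=/dev/zero of="$path" bs=1M count="$size_mb"  # GNU dd size syntax
  $run chmod 600 "$path"    # swap should not be readable by other users
  $run mkswap "$path"       # write the swap signature
  $run swapon "$path"       # start using it immediately
}
```

For example, DRY_RUN=1 make_swapfile /swapfile 4096 prints the four commands without touching anything. To keep the swap file across reboots, the usual approach is an /etc/fstab line along the lines of /swapfile none swap sw 0 0.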

EDIT: I don’t know if there’s a way to get a dump of processes that are using memory at exactly the instant of the OOM, but if you want to get an idea of what memory usage looks like at that time, you can certainly do something like leave a top -b -o %MEM -d 2 >log.txt process running to get a snapshot of process memory use every two seconds. top will print a timestamp at the top of each entry, and between the timestamped OOM entry in the kernel log and the timestamped dump, you should be able to look at what’s using memory.

There are also various other packages for logging resource usage that provide less information, but also don’t use so much space, if you want to view historical resource usage. sysstat is what I usually use, with the sar command to view logged data, though that’s very elderly. Things like that won’t dump a list of all processes, but they will let you know if, over a given period of time, a server is running low on available memory.

I’m the other way. I’d rather have battery life on cell phones, and turn the refresh rate down.

On a desktop, where power usage is basically irrelevant, sure, I’ll crank the refresh rate way up. One of the most immediately noticeable things is the mouse pointer, and that doesn’t exist on touch interfaces.

Ah, gotcha. Just for the record (though it doesn’t really matter as regards your point, because I was incorrectly assuming that you were using it as an example of something with Firewire onboard), there are apparently two different products:

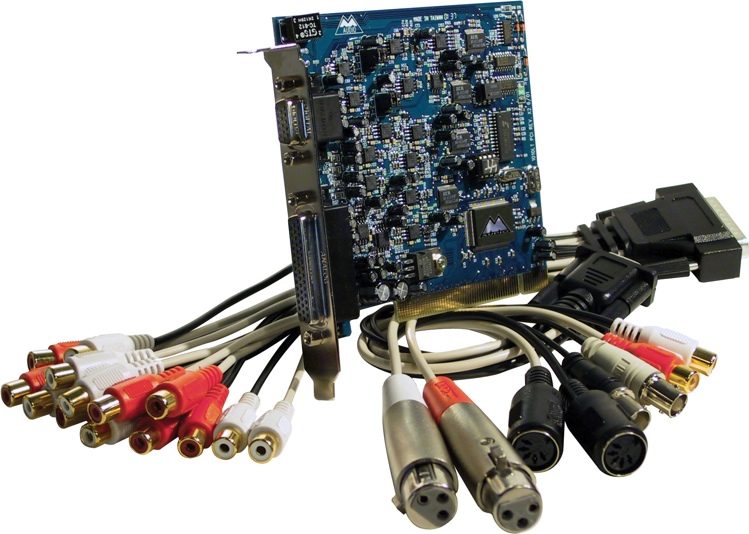

The 1010 has a PCI card, but it talks to an external box:

The 1010LT has a PCI card alone, no external box, and then a ton of cables that fan out directly from the card:

Neither appears to have a Firewire interface. IIRC, the 1010LT was less expensive and was the one I was using.

I have a feeling it’s mostly due to some audio and video hardware that has real longevity. I’ve got a VHS+MiniDV player that I am transferring old videos from using FireWire (well, for the MiniDV; the VHS side is S-Video capture).

Yeah, that’s a thought…though honestly, unless whatever someone is doing requires real-time processing and adding latency is a problem, they can probably pass it through some other old device that can speak both Firewire and something else.

Probably the m-audio delta 1010

That doesn’t have a Firewire interface, does it? I thought I had one of those.

checks

Oh, I’m thinking of the 1010LT, not the 1010. That lives on a PCI card.

If it’s Linux, it sounds like it should just work out of the box, at least for a while longer.

https://www.tomshardware.com/news/linux-to-support-firewire-until-2029

Linux to Support Firewire Until 2029

The ancient connectivity standard still has years of life ahead of it.

Firewire is getting a new lease on life and will have extended support up to 2029 on Linux operating systems. Phoronix reports that a Linux maintainer Takashi Sakamoto has volunteered to oversee the Firewire subsystem for Linux during this time, and will work on Firewire’s core functions and sound drivers for the remaining few that still use the connectivity standard.

Further, Takashi Sakamoto says that his work will help users transition from Firewire to more modern technology standards (like perhaps USB 2.0). Apparently, Firewire still has a dedicated fanbase that is big enough to warrant six more years of support. But we suspect this will be the final stretch for Firewire support, surrounding Linux operating systems. Once 2029 comes around, there’s a good chance Firewire will finally be dropped from the Linux kernel altogether.

Ah. Thanks for the context.

Well, after they have product out, third parties will benchmark them, and we’ll see how they actually stack up.

Just 1 in 4 Brits think the UK is viewed positively on the world stage, with most wanting their country to play a large role in international affairs, exclusive poll shows

I — American — view the UK positively in international affairs, but frankly, if you’re comparing the UK to its recent history:

The UK itself has grown, but a lot of international influence came from the UK being, globally, at the leading edge of the Industrial Revolution. That’s a discovery-of-fire level event, a pretty rare situation in human history.

That was a major part of the Great Divergence; the UK was highly developed, and pulled wildly more than its weight in per capita terms.

If the bar that a Briton is setting is relative to the UK’s international role over the past couple centuries, that’s a high bar to set, because the UK had extraordinary influence in the world in that period. That’s not because the UK’s economy has become weaker, but because the world has been economically converging; less-developed countries have been catching up. I’d say that the UK definitely punches well above its weight in population terms internationally today, and is probably relatively-engaged. Could it do more? Well, I’m sure it could. But I don’t really think of the UK as especially isolationist. Name another country of 70 million that independently has as large an impact internationally.

Yeah, I saw, but it’s an interesting topic.

Is your concern compromise of your data or loss of the server?

My guess is that most burglaries don’t wind up with people trying to make use of the data on computers.

As to loss, I mean, do an off-site backup of stuff that you can’t handle losing and in the unlikely case that it gets stolen, be prepared to replace hardware.

If you just want to keep the hardware out of sight and create a minimal barrier, you can get locking, ventilated racks. I don’t know how cost-effective that is; I’d think that that might cost more than the expected value of the loss from theft. If a computer costs $1000 and you have a 1% chance of it being stolen, you should not spend more than $10 on prevention in terms of reducing cost of hardware loss, even if that method is 100% effective.
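Spelled out, that ceiling is just the expected loss the measure avoids. Here p is the chance of theft over whatever period you care about, C is the hardware cost, and e (a symbol introduced here for illustration) is the fraction of thefts the measure actually prevents:

```latex
S_{\max} = p \, C \, e = 0.01 \times \$1000 \times 1 = \$10
```

With a less effective measure, say e = 0.5, the ceiling drops to $5.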

Mantraps that use deadly force are illegal in the United States, and in notable tort law cases the trespasser has successfully sued the property owner for damages caused by the mantrap. There is also the possibility that such traps could endanger emergency service personnel such as firefighters who must forcefully enter such buildings during emergencies. As noted in the important American court case of Katko v. Briney, “the law has always placed a higher value upon human safety than upon mere rights of property”.[5]

EDIT: I’d add that I don’t know about the “life always takes precedence over property” statement; Texas has pretty permissive use of deadly force in defense of property. However, I don’t think that anywhere in the US permits traps that make use of deadly force.

You might want to list the platform you want to use it on. I’m assuming that you’re wanting to access this on a smartphone of some sort?

Mullvad apparently uses WireGuard. Is there an Android WireGuard client that supports multiple VPNs and toggling each independently?

Datacentres also rely on water, to help cool the humming banks of hardware. In the UK the Environment Agency, which was already warning about a future water shortfall for homes and farming, recently conceded the rapid expansion of AI had made it impossible to forecast future demand.

Research carried out by Google found that fulfilling a typical prompt entered into its AI assistant Gemini consumed the equivalent of five drops of water – as well as energy equivalent to watching nine seconds of TV.

I mean, the UK could say “we won’t do parallel-processing datacenters”. If there truly are hard caps on water or energy specific to the UK that cannot be dealt with, that might make sense.

But I strongly suspect that virtually all applications can be done at a greater distance. Something like an LLM chatbot is comparatively latency-tolerant for most uses — it doesn’t matter whether it’s some milliseconds away — and does not have high bandwidth requirements to the user. If the datacenters aren’t placed in the UK, my assumption is that they’ll be placed somewhere else. Mainland Europe, maybe.

Also, my guess is that water is probably not an issue, at least if one considers the UK as a whole. I had a comment a while back pointing out that the River Tay (and Scotland as a whole, in fact) doesn’t have a ton of datacenters near it the way London does, and has a smaller population around it than the Thames does. If it became necessary, even if it costs more to deal with, it should be possible to dissipate waste heat by evaporating seawater rather than freshwater; as an archipelago, nearly all portions of the UK are not far from an effectively unlimited supply of seawater.

And while the infrastructure for it doesn’t widely exist today, it’s possible to make constructive use of the heat, too, like via district heating driven off waste heat; if you already have a city that is a radiator (undesirably) bleeding heat into the environment, having a source of heat to insert into it can be useful:

https://www.weforum.org/stories/2025/06/sustainable-data-centre-heating/

Data centres, the essential backbone of our increasingly generative AI world, consume vast amounts of electricity and produce significant amounts of heat.

Countries, especially in Europe, are pioneering the reuse of this waste heat to power homes and businesses in the local area.

As the chart above shows, the United States has by far the most data centres in the world. So many, in fact, the US Energy Information Administration recently announced that these facilities will push the country’s electricity consumption to record highs this year and next. The US is not, however, at the forefront of waste heat adoption. Europe, and particularly the Nordic countries, are instead blazing a trail.

That may be a more-useful strategy in Europe, where a greater proportion of energy is — presently, as I don’t know what will be the case in a warming world — expended on heating than on air conditioning, unlike in the United States. That being said, one also requires sufficient residential population density to make effective use of district heating. And in the UK, there are probably few places that would make use of year-round heating, so only part of the waste heat is utilized.

looks further

The page I linked to mentions that this is something that London — which has many datacenters — is apparently already doing:

And in the UK, the Mayor of London recently announced plans for a new district heat network in the west of the city, expected to heat over 9,000 homes via local data centres.

I mean, I’m listing it because I believe it’s something of some value that could be done with the information. But it’s an “are the benefits worth the costs” thing. Let’s say that you need to pay $800 and wear a specific set of glasses everywhere. Gotta maintain a charge on them. And while they’re maybe discreet compared to a smartphone, I assume that people in a role where they’re prominent (diplomacy, business deal-cutting, etc.) probably know what they look like and do, so I imagine that any relationship-building that might come from showing that you can remember someone’s name and personal details (“how are Margaret and the kids?”) would likely be somewhat undermined if they know that you’re walking around with the equivalent of your Rolodex in front of your eyeballs. Plus, some people might not like others running around with recording gear (especially in some of the roles listed).

I’m sure that there are a nonzero number of people who would wear them, but I’m hesitant to believe that as they exist today, they’d be a major success.

I think that some of the people who are building some of these things grew up with Snow Crash and it was an influence on them. Google went out and made Google Earth; Snow Crash had a piece of software called Earth that did more-or-less the same thing (albeit with more layers and data sources than Google Earth has today). Snow Crash had the Metaverse with VR goggles and such; Zuckerberg very badly wanted to make it real, and made a VR world and VR hardware and called it the Metaverse. Snow Crash predicts people wearing augmented reality gear, but also talks about some of the social issues inherent in doing so; it didn’t expect everyone to start running around with them:

Someone in this overpass, somewhere, is bouncing a laser beam off Hiro’s face. It’s annoying. Without being too obvious about it, he changes his course slightly, wanders over to a point downwind of a trash fire that’s burning in a steel drum. Now he’s standing in the middle of a plume of diluted smoke that he can smell but can’t quite see.

It’s a gargoyle, standing in the dimness next to a shanty. Just in case he’s not already conspicuous enough, he’s wearing a suit. Hiro starts walking toward him. Gargoyles represent the embarrassing side of the Central Intelligence Corporation. Instead of using laptops, they wear their computers on their bodies, broken up into separate modules that hang on the waist, on the back, on the headset. They serve as human surveillance devices, recording everything that happens around them. Nothing looks stupider, these getups are the modern-day equivalent of the slide-rule scabbard or the calculator pouch on the belt, marking the user as belonging to a class that is at once above and far below human society. They are a boon to Hiro because they embody the worst stereotype of the CIC stringer. They draw all of the attention. The payoff for this self-imposed ostracism is that you can be in the Metaverse all the time, and gather intelligence all the time.

The CIC brass can’t stand these guys because they upload staggering quantities of useless information to the database, on the off chance that some of it will eventually be useful. It’s like writing down the license number of every car you see on your way to work each morning, just in case one of them will be involved in a hit-and-run accident. Even the CIC database can only hold so much garbage. So, usually, these habitual gargoyles get kicked out of CIC before too long.

This guy hasn’t been kicked out yet. And to judge from the quality of his equipment – which is very expensive – he’s been at it for a while. So he must be pretty good.

If so, what’s he doing hanging around this place?

“Hiro Protagonist,” the gargoyle says as Hiro finally tracks him down in the darkness beside a shanty. “CIC stringer for eleven months. Specializing in the Industry. Former hacker, security guard, pizza deliverer, concert promoter.” He sort of mumbles it, not wanting Hiro to waste his time reciting a bunch of known facts.

The laser that kept jabbing Hiro in the eye was shot out of this guy’s computer, from a peripheral device that sits above his goggles in the middle of his forehead. A long-range retinal scanner. If you turn toward him with your eyes open, the laser shoots out, penetrates your iris, tenderest of sphincters, and scans your retina. The results are shot back to CIC, which has a database of several tens of millions of scanned retinas. Within a few seconds, if you’re in the database already, the owner finds out who you are. If you’re not already in the database, well, you are now.

Of course, the user has to have access privileges. And once he gets your identity, he has to have more access privileges to find out personal information about you. This guy, apparently, has a lot of access privileges. A lot more than Hiro.

“Name’s Lagos,” the gargoyle says.

So this is the guy. Hiro considers asking him what the hell he’s doing here. He’d love to take him out for a drink, talk to him about how the Librarian was coded. But he’s pissed off. Lagos is being rude to him (gargoyles are rude by definition).

“You here on the Raven thing? Or just that fuzz-grunge tip you’ve been working on for the last, uh, thirty-six days approximately?” Lagos says.

Gargoyles are no fun to talk to. They never finish a sentence. They are adrift in a laser-drawn world, scanning retinas in all directions, doing background checks on everyone within a thousand yards, seeing everything in visual light, infrared, millimeter wave radar, and ultrasound all at once. You think they’re talking to you, but they’re actually poring over the credit record of some stranger on the other side of the room, or identifying the make and model of airplanes flying overhead. For all he knows, Lagos is standing there measuring the length of Hiro’s cock through his trousers while they pretend to make conversation.

I think that Stephenson probably did a reasonable job there of highlighting some of the likely social issues that come with having wearable computers with always-active sensors running.

It’s not clear to me whether-or-not the display is fundamentally different from past versions, but if not, it’s a relatively-low-resolution display on one eye (600x600). That’s not really something you’d use as a general monitor replacement.

The problem is really that what they have to do is come up with software that makes the user want to glance at something frequently (or maybe unobtrusively) enough that they don’t want to have their phone out.

A phone has a generally-more-capable input system, more battery, a display that is for most-purposes superior, and doesn’t require being on your face all the time you use it.

I’m not saying that there aren’t applications. But to me, most applications look like smartwatch things, and smartwatches haven’t really taken the world by storm. Just not enough benefit to having a second computing device strapped onto you when you’re already carrying a phone.

Say someone messages multiple people a lot, can’t afford to have sound playing, and needs to be moving around, so they can’t keep their phone on a desk in front of them with the display visible; glasses like these could give them a visual indicator of an incoming message and who it’s from. That could provide some utility, but I think that for the vast majority of people, it’s just not enough of a use case to warrant wearing the thing if you’ve already got a smartphone.

My guess is that the reason you’d use something like this specific product, which has a camera on it and limited display capabilities (compared to, say, XREAL’s options), and so isn’t really geared up for AR applications where you’re overlaying data all over everything you see, is to pull up a small amount of information about whoever you’re looking at, like doing facial recognition to remember or obtain someone’s name (and avoid a bit of social awkwardness). Maybe there are people for whom that’s worthwhile, but the market just seems pretty limited to me for that.

I think that maybe there’s a world where we want to have more battery power and/or compute capability with us than an all-in-one smartphone will handle, and so we separate display and input devices and have some sort of wireless communication between them. This product has already been split into two components, a wristband and glasses. In theory, you could have a belt-mounted, purse-contained, or backpack-contained computer with a separate display and input device, which could provide for more-capable systems without needing to be holding a heavy system up. I’m willing to believe that the “multi-component wearable computer” could be a thing. We’re already there to a limited degree with Bluetooth headsets/earpieces. But I don’t really think that we’re at that world more broadly.

For any product, I just have to ask — what’s the benefit it provides me with? What is the use case? Who wants to use it?

If you get one, it’s $800. It provides you with a different input mechanism than a smartphone, which might be useful for certain applications, though I think it is less generally useful. It provides you with an always-visible HUD (low-resolution and monocular, unless this generation has changed) that a user may be able to check more discreetly than a smartphone. It has a camera always out. For it to make sense as a product, I think that there has to be some pretty clear, compelling application that leverages those characteristics.

rsync is pretty fast, frankly. Once it’s run once, if you passed -a or -t, it’ll have synchronized mtimes. If the modification time and file size match, by default, rsync won’t look at a file further, so subsequent runs will be pretty fast. You can’t really beat that for speed unless you have some sort of monitoring system in place (like, filesystem-level support for identifying modifications).
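A rough illustration of the idea behind that default “quick check”, not of how rsync implements it: two files are treated as the same if their size and mtime match. The helper quick_check_same is hypothetical and relies on GNU coreutils stat; in practice rsync does this for you.

```shell
#!/bin/sh
# Hypothetical helper: succeed if two files have the same size and mtime.
# Uses GNU stat's -c format; this is an illustration, not rsync's code.
quick_check_same() {
  # %s = size in bytes, %Y = mtime in seconds since the epoch
  [ "$(stat -c '%s %Y' "$1")" = "$(stat -c '%s %Y' "$2")" ]
}

# In real use:
#   rsync -a src/ dest/             # -a implies -t, so mtimes are copied
#   rsync -a --checksum src/ dest/  # compare file contents instead (slower)
```

The same logic shows the blind spot: a file rewritten with identical size and its old mtime restored looks unchanged, which is the case --checksum exists for, at the cost of reading every file.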